Same parameters

Topic models are inherently probabilistic, and some algorithms, such as LDA, do not always give the same answer when run twice on the same data with the same parameters. A researcher who wants to validate findings from one model may want to confirm two things: that no significant changes arise from chance or hidden parameters, and that any changes that do occur leave the patterns they care most about intact.

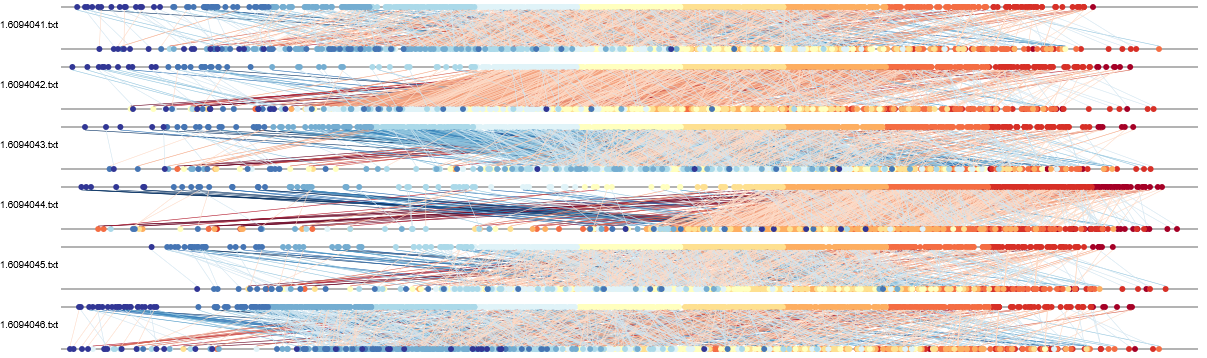

We decided to investigate this. To do so, we built two 25-topic models with the same parameters on a collection of visualization abstracts. We found inconsistencies that may affect some use cases more than others. Specifically, both topic alignment and document similarity changed between the two models. Although the differences between topics may fall within a reasonable tolerance (see above), changes in document similarity tended to be much larger (see below). This suggests that while LDA may work well for topic-based tasks such as corpus summarization, it may be less well suited to tasks that require consistent judgments of document similarity. For further discussion, see the paper.
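The two kinds of change described above can be measured in several ways. The sketch below is one illustrative option, not the procedure used in the paper. It assumes each run's output is already available as plain lists: topic-word distributions over a shared vocabulary (`topics_a`, `topics_b`) and document-topic distributions (`doc_topics_a`, `doc_topics_b`). The function names, the use of cosine similarity, the greedy topic matching, and the k-nearest-neighbour Jaccard score are all hypothetical choices made for this example.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def align_topics(topics_a, topics_b):
    """Greedily pair topics from run A with topics from run B by cosine similarity.

    Returns (index_a, index_b, similarity) tuples sorted by index_a.
    Greedy matching is a simplification: an optimal assignment
    (e.g. the Hungarian algorithm) may pair some topics differently.
    """
    pairs = sorted(
        ((cosine(ta, tb), i, j)
         for i, ta in enumerate(topics_a)
         for j, tb in enumerate(topics_b)),
        reverse=True,
    )
    used_a, used_b, matches = set(), set(), []
    for sim, i, j in pairs:
        if i not in used_a and j not in used_b:
            used_a.add(i)
            used_b.add(j)
            matches.append((i, j, sim))
    return sorted(matches)


def nearest_neighbors(doc_topics, k):
    """For each document, the set of indices of its k most similar other documents."""
    result = []
    for i, di in enumerate(doc_topics):
        sims = [(cosine(di, dj), j) for j, dj in enumerate(doc_topics) if j != i]
        sims.sort(key=lambda s: (-s[0], s[1]))  # highest similarity first, ties by index
        result.append({j for _, j in sims[:k]})
    return result


def neighbor_overlap(doc_topics_a, doc_topics_b, k=5):
    """Mean Jaccard overlap of each document's k nearest neighbours across two runs.

    Document-to-document cosine similarity within one run does not depend on
    topic order, so no topic alignment is needed here. 1.0 means every document
    keeps exactly the same neighbours; lower values mean less stable similarity.
    """
    nn_a = nearest_neighbors(doc_topics_a, k)
    nn_b = nearest_neighbors(doc_topics_b, k)
    scores = [len(a & b) / len(a | b) if a | b else 1.0 for a, b in zip(nn_a, nn_b)]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Toy data: run B has the same two topics as run A, in the opposite order.
    topics_a = [[0.7, 0.2, 0.1], [0.1, 0.1, 0.8]]
    topics_b = [[0.1, 0.2, 0.7], [0.6, 0.3, 0.1]]
    for i, j, sim in align_topics(topics_a, topics_b):
        print(f"topic A{i} <-> topic B{j} (cosine {sim:.3f})")
```

With real models, the two sets of inputs would come from two training runs that differ only in their random seed. Keeping the two measurements separate reflects the distinction drawn above: topics can align closely while each document's nearest neighbours still shift.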